In Hollywood movies, visual effects (or “VFX” for short) are a huge part of the storytelling. Often, it doesn’t matter how well written the script is: if the special effects are not convincing enough, the audience won’t believe the story.

There’s even a special Oscar® category just for them. But how do those visual effects shots actually work?

If you’re a fan of movies, chances are you’ve seen some really awesome visual effects. These are the special effects and computer-generated imagery that make movies like Star Wars, Avatar, and Titanic so great. Now, there’s a whole new wave of tools that allow for even more exciting and engaging storytelling.

VFX is an expensive process that can take years to complete, and it’s incredibly hard work. A big Hollywood blockbuster can involve well over a thousand VFX artists and engineers.

VFX (Visual Effects) 101

Visual effects are an essential element of movie production, especially for Hollywood blockbusters, and they are a key part of today’s films and television series alike.

They can give viewers a better understanding of the story being told and allow filmmakers to add a sense of scale to the experience.

While visual effects have been used in film since its earliest days, the rise of computer-generated imagery (CGI) has revolutionized how they are created.

As a result, many visual effects artists now specialize in CGI rather than traditional techniques such as stop motion, puppetry, and claymation.

Some of the most familiar uses of visual effects are explosions, fire, creatures, and the destruction of objects, to name a few. If the director can imagine it, VFX artists can bring it to the screen.

History of Visual Effects in Movies

The history of visual effects is nearly as old as motion pictures themselves. French inventor Louis Le Prince shot some of the earliest surviving moving images in 1888, using a single-lens camera of his own design.

Within a few years, filmmakers were already experimenting with tricks. Georges Méliès, a French illusionist turned filmmaker best known for A Trip to the Moon (1902), popularized in-camera effects such as the substitution splice (stopping the camera, changing something in the scene, and restarting it) and multiple exposures. Later, the optical printer, a device that re-photographs existing film frames, made it possible to refine and combine these tricks.

By the 1930s, optical compositing with these printers let filmmakers combine separately filmed elements, such as live action, miniatures, and matte paintings, into a single frame, creating the illusion of depth and scale in a two-dimensional image.

Optical compositing remained the standard for Hollywood visual effects for decades, until computer technology made it possible to create effects that were previously impossible or impractical.

By the 1960s, visual effects had begun to play a major role in the production of feature films. The 1970s ushered in a new era as computers entered the effects process, from the digitally processed imagery in Westworld (1973) to the computer-controlled motion-control camera used to film the spaceship miniatures in Star Wars (1977).

This paved the way for computer-generated imagery (CGI), which is now a central part of the visual effects industry. In the 1980s, films such as Tron (1982) and Young Sherlock Holmes (1985), whose stained-glass knight is often cited as the first fully computer-generated character in a feature film, showed what CGI could do on the big screen.

Digital compositing, which combines digital image manipulation with traditional visual effects techniques, gradually replaced the optical printer, and in the 1990s digital technology greatly refined the look of visual effects.

This made convincingly photorealistic visual effects possible, with films such as Terminator 2: Judgment Day (1991) and Jurassic Park (1993) as landmarks, and inspired a new generation of visual effects artists. The new wave of visual effects artists came from backgrounds in film, animation, television, video games, and digital media.

The 2000s brought another round of advances. Digital cinema cameras and faster computers increasingly made real-time previews of composites possible, letting filmmakers see live-action footage combined with computer-generated environments while still on set.

What is a VFX Pipeline?

The VFX pipeline spans a movie’s pre-production, production, and post-production. Let’s go down the rabbit hole as we explain the steps of the VFX pipeline in more detail.
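One way to picture a pipeline is as a dependency graph: each stage consumes the output of earlier ones. The Python sketch below uses the standard library’s `graphlib.TopologicalSorter` to compute one valid working order. The stage names and dependencies are a hypothetical, simplified example, not any studio’s actual pipeline; in practice, stages overlap and iterate.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical, simplified pipeline: each stage lists the stages whose output it needs.
PIPELINE = {
    "storyboard": set(),
    "matchmove": set(),  # in practice it needs the filmed plate, which isn't modeled here
    "previs": {"storyboard"},
    "concept_design": {"storyboard"},
    "layout": {"previs", "matchmove"},
    "modeling": {"concept_design"},
    "rigging": {"modeling"},
    "animation": {"layout", "rigging"},
    "fx": {"animation"},
    "lighting_rendering": {"animation", "fx"},
    "compositing": {"lighting_rendering"},
}

def stage_order(pipeline):
    """Return one order in which the stages can run so every dependency comes first."""
    return list(TopologicalSorter(pipeline).static_order())

if __name__ == "__main__":
    for step, stage in enumerate(stage_order(PIPELINE), start=1):
        print(step, stage)
```

Because every stage in this toy graph feeds, directly or indirectly, into compositing, compositing always comes out last.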

Storyboarding and Animatics

Storyboarding breaks the script down into individual shots, each drawn as a panel. An animatic then edits those panels together with rough timing and, often, a temporary soundtrack. This process allows VFX artists and animators to see how each element of a scene will look and move on screen before any of it is assembled in the final cut of the movie.

The same method can be used to help you visualize the various sections of your content.
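Because an animatic is essentially a timed sequence of panels, working out where each panel falls is simple arithmetic. This Python sketch assumes 24 frames per second and uses hypothetical panel names and durations; it lays the panels end to end and reports each one’s frame range.

```python
from dataclasses import dataclass

FPS = 24  # assumption: the common theatrical frame rate

@dataclass
class Panel:
    name: str        # hypothetical panel description
    seconds: float   # how long the panel is held in the animatic

def frame_ranges(panels, fps=FPS):
    """Lay panels end to end and return (name, first_frame, last_frame) for each.
    Frames are numbered from 1 here, which is a convention, not a rule."""
    ranges = []
    start = 1
    for panel in panels:
        length = round(panel.seconds * fps)  # whole frames only
        ranges.append((panel.name, start, start + length - 1))
        start += length
    return ranges

board = [
    Panel("wide establishing shot", 3.0),
    Panel("close-up reaction", 1.5),
    Panel("explosion", 2.0),
]
for name, first, last in frame_ranges(board):
    print(f"{name}: frames {first}-{last}")
```

With these panels, the explosion lands on frames 109-156, so changing any earlier panel’s timing shifts every panel after it.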

What is Pre-Vis?

Pre-vis (short for previsualization) is a process where artists create rough versions of scenes, from storyboards to simple 3D animations, before a movie is even shot. This helps directors work out what they want to achieve visually.

Pre-vis helps the director stay focused on the overall story while giving him or her a chance to refine it. It’s a very important step because it lets the director see a rough version of the finished movie before shooting begins, keeping the director’s eye on the big picture while the visual artist keeps an eye on the details.

Film director Alfred Hitchcock famously storyboarded his films in great detail. He reportedly had so much confidence in this planning that he rarely looked through the camera; instead, he had his cinematographer follow his storyboards to frame the scenes.

Concept and Design Process

Concept art and design are two different aspects of the process, although the terms are sometimes used interchangeably. When we think about concept art, we imagine images from movies, video games, or comics.

In fact, a concept art piece can be anything from a simple sketch on a napkin to an elaborate rendering on paper or canvas. While concept art is typically not directly tied to the final design, it’s a crucial component of the entire creative process.

A director or designer may work with a concept artist to help shape their artistic vision, and the designer’s job is to bring those images to life. This is an important stage of the process because it often takes several iterations to reach the final design.

Ralph McQuarrie was a famous concept artist for the Star Wars franchise who created a number of highly recognizable concept images, including the Death Star, Yoda’s home, and Darth Vader’s helmet.

His style can be seen in many of the most recognizable images of the original Star Wars trilogy. His work also reached beyond Star Wars, including concept art for films such as Raiders of the Lost Ark and Close Encounters of the Third Kind.

What the Heck Are Matchmove and Camera Tracking?

Matchmoving is an important technique in the VFX artist’s toolkit. It means matching the movement of computer-generated elements to the movement in live-action footage, so that CG objects appear locked into the scene. Although it is closely associated with green screen work, its use has expanded greatly, and it can be used to create all sorts of amazing effects in post-production.

Matchmoving is done with specialized software. It can recreate the motion of the camera, or of an object or actor in the shot, so that digital elements can follow along.

The software works by tracking distinctive points from frame to frame in the source footage, such as tracking markers placed on a green screen or natural details like corners and edges. From the way those 2D points move, it works out where the camera (or object) must have been in 3D for every frame, so a CG element can be rendered from the same viewpoint and composited into the shot.

Camera tracking is the part of this process that creates a virtual camera whose position, rotation, and lens match the real camera that shot the footage. Because the virtual camera moves exactly as the real one did, CG elements rendered through it appear to sit inside the scene as the camera moves.
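To make the “work out where the camera must have been” step concrete, here is a deliberately tiny Python sketch. It assumes an idealized pinhole camera with no rotation, a known focal length, and hypothetical 3D points whose positions are already known, and it only searches for the camera’s sideways position. The idea it shows is the one camera solvers rely on: choose the camera that minimizes reprojection error, the pixel distance between where 3D points project and where they were tracked. Production tools solve for full position, rotation, and lens at once with nonlinear optimization.

```python
def project(point, cam, focal_px=1000.0, cx=960.0, cy=540.0):
    """Idealized pinhole camera at `cam`, looking down +z with no rotation.
    focal_px and the image center (cx, cy) are hypothetical values for a 1920x1080 frame."""
    x, y, z = (p - c for p, c in zip(point, cam))
    return (cx + focal_px * x / z, cy - focal_px * y / z)  # image y grows downward

def reprojection_error(points3d, tracks2d, cam):
    """Sum of squared pixel distances between projected points and tracked 2D positions."""
    total = 0.0
    for point, (u, v) in zip(points3d, tracks2d):
        pu, pv = project(point, cam)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return total

def solve_camera_x(points3d, tracks2d, candidates):
    """Brute-force search for the sideways camera position that best explains the tracks."""
    return min(candidates, key=lambda x: reprojection_error(points3d, tracks2d, (x, 0.0, 0.0)))

# Hypothetical scene: three known 3D points and where a tracker found them in one frame.
points = [(0.0, 0.0, 10.0), (2.0, 1.0, 12.0), (-1.0, -1.0, 8.0)]
true_cam = (0.5, 0.0, 0.0)
tracks = [project(p, true_cam) for p in points]  # stand-in for real 2D tracking data
candidates = [i / 10 for i in range(-20, 21)]    # -2.0 .. 2.0 in 0.1 steps
print(solve_camera_x(points, tracks, candidates))
```

The search recovers the camera position that generated the tracks because that is the only candidate with zero reprojection error.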

Layout and Production Design

One of the key aspects of film production is the art of layout and production design. With such a large amount of information being presented to the audience, the director, editor, producers and other staff must ensure that all elements are aligned and fit together in a way that is pleasing to the eye.

The process begins with the production design of the overall look and feel of the movie, which should be inspired by the director’s vision. After the design is completed, it’s time to lay out the script and the scene in terms of visual elements: camera angles, lighting, set dressing, etc.

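In the VFX pipeline, layout then works shot by shot: artists block out the virtual camera (its position, lens, and movement) along with the rough placement of sets, props, and characters, which later stages such as animation, lighting, and compositing build on.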
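Choosing a lens in layout is partly arithmetic. For an ideal rectilinear lens, the horizontal angle of view is 2 × atan(w / (2f)), where w is the sensor (or film gate) width and f is the focal length. The Python sketch below applies that standard formula; the 36 mm sensor width is an assumption (a full-frame still format), not a value from any particular production.

```python
import math

def horizontal_fov_deg(focal_mm, sensor_width_mm):
    """Horizontal angle of view of an ideal rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))

SENSOR_WIDTH_MM = 36.0  # assumption: full-frame width; cinema camera gates vary
for focal in (18, 35, 50, 100):
    print(f"{focal} mm lens: {horizontal_fov_deg(focal, SENSOR_WIDTH_MM):.1f} degrees")
```

A longer lens gives a narrower view, which is why layout artists choose lenses as well as camera positions when matching the director’s intended framing.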

What is Asset Creation and Modeling?

“Modeling” refers to creating a digital 3D version of an object, whether it will replace a real-world object in the final shot or represents something that never existed. For photoreal work, the digital version needs to be very realistic and detailed.

For example, if a digital car will replace the real car in some shots, its body needs to match the real one closely enough that the audience can’t tell them apart. The same applies to modeling any other physical object in the movie.

If you’re making a video game or animated movie, the more carefully your models are built, the better the final product will look. In addition to modeling the main object, you also need to create digital versions of all the elements that go into it.

For example, you need to create digital versions of the wheels, tires, lights, engine, etc. These items, like the models themselves, are called “assets,” and they need the same level of detail. Assets can be of any type: 2D images, 3D models, textures, sounds, animations, etc.
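Under the hood, a 3D model is usually stored as a mesh: a list of vertex positions plus faces that connect them. The Python sketch below is a minimal, hypothetical mesh type (not any 3D package’s file format) with triangular faces, plus a method that computes surface area from the cross product of each triangle’s edges.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]  # Python 3.9+ generic syntax

@dataclass
class Mesh:
    """Hypothetical minimal mesh: vertex positions plus triangles that index into them."""
    name: str
    vertices: list[Vec3]
    faces: list[tuple[int, int, int]]

    def surface_area(self) -> float:
        total = 0.0
        for i, j, k in self.faces:
            a, b, c = self.vertices[i], self.vertices[j], self.vertices[k]
            u = [b[n] - a[n] for n in range(3)]  # edge a -> b
            v = [c[n] - a[n] for n in range(3)]  # edge a -> c
            cross = (u[1] * v[2] - u[2] * v[1],
                     u[2] * v[0] - u[0] * v[2],
                     u[0] * v[1] - u[1] * v[0])
            total += 0.5 * math.sqrt(sum(x * x for x in cross))  # triangle area
        return total

# A 1 x 1 flat panel (say, part of a car door) made of two triangles.
panel = Mesh(
    name="door_panel",
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    faces=[(0, 1, 2), (0, 2, 3)],
)
print(panel.surface_area())
```

Sharing vertices between faces, as the two triangles here share vertices 0 and 2, keeps the surface connected and avoids storing the same point twice.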

In the film industry, R&D (research and development) refers to the work of developing new software, tools, and techniques for producing visual effects and motion picture animation. Most often used in the context of feature films, the term “visual effects” covers everything from the final composite of a shot’s background or foreground to 3D models and animation, matte paintings, special effects, optical effects, and more.

Motion picture animation, in turn, involves bringing characters and objects in a film to life with movement.

R&D is not restricted to film; it can also serve computer animation more broadly. The process of motion picture animation begins with a storyboard. Storyboards are preliminary drawings that visualize a scene from start to finish, showing the actions and camera angles for a sequence of shots.

The storyboard is then used as a guide for the design and creation of a three-dimensional model or animated figure. A director, producer, and other members of the creative team use these tools to come up with the visual style and theme of a motion picture.

A key step in visual effects is called “rigging.” A “rig” is a digital control system, typically a skeleton of joints with controls attached, that lets animators move, rotate, or otherwise manipulate a character or object in the virtual world of a movie, video game, etc.

Rigs are built in 3D software. To the untrained eye, the end result might look as though it was created by magic. However, many animators and artists spend weeks, months, or even years learning how to rig a character or object.

So why does a rigger do what they do? If you have ever watched a movie and noticed a digital character moving in an unrealistic way, a poorly built rig may have been part of the problem.

A well-built rig makes believable motion possible: when a character walks, its knees bend the right way and its feet stay planted, so the legs move in a way that makes sense.
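Most character rigs are built on a hierarchy of joints, and forward kinematics is the simplest way such a hierarchy moves: each joint’s angle is relative to its parent, so rotating the hip carries the knee and ankle along with it. This Python sketch computes 2D joint positions for a hypothetical leg chain; real rigs work in 3D and add inverse kinematics, constraints, and skin deformation on top.

```python
import math

def forward_kinematics(bone_lengths, joint_angles_deg, root=(0.0, 0.0)):
    """Return 2D positions of every joint in a chain, starting at the root.
    Each angle is relative to the parent bone, so rotating one joint
    moves every joint below it in the chain."""
    x, y = root
    heading = 0.0  # accumulated rotation, in radians
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions

# Hypothetical leg: thigh and shin of 0.45 units each, hip at height 0.9.
leg = [0.45, 0.45]
print(forward_kinematics(leg, [-90, 0], root=(0.0, 0.9)))    # straight leg, pointing down
print(forward_kinematics(leg, [-60, -30], root=(0.0, 0.9)))  # hip rotated, knee bent
```

Animators then pose the character by changing a few angles rather than moving every point of the leg by hand.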

What is Animation?

In live-action movies, animation is often used for moments that would be too dangerous or impossible to film. For example, when a character falls from the roof of a building, that’s a pretty dramatic moment, and it may be performed by an animated digital double.

We rarely see something like that in real life, so when it happens on screen, it’s an incredibly dramatic moment that makes people stop and watch.

It’s similar to how many of us react to a sudden burst of laughter or a funny story. It’s an instant response that draws us in.

That’s what animation is all about: drawing us into your story. We’re interested in how a character responds to something, and it’s fun to see how things develop. Animation is the art of creating a sequence of images that produces the illusion of movement when played back. It’s not limited to movies or TV shows, but those are probably its best-known forms.

Animation on film is also more than 100 years old. In the 1920s, it was very different from what it is today: there were no computers, and every frame had to be drawn or photographed by hand. In fact, early animation was pretty crude.

Nowadays, we can do so much more with animation. We can have special effects, 3D environments, and animated characters.
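In computer animation, animators typically set key poses (keyframes) and the software fills in the frames in between. The Python sketch below shows the simplest version of that idea, linear interpolation of one value, such as a hypothetical character’s height off the ground, between keyframes; animation packages usually use smoother spline curves instead.

```python
from bisect import bisect_right

def interpolate(keys, frame):
    """Linearly interpolate a value at `frame` from (frame, value) keyframes sorted by frame.
    Before the first key or after the last, the value is held constant."""
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # index of the first key after `frame`
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)     # 0 at the earlier key, 1 at the later one
    return v0 + t * (v1 - v0)

# Hypothetical keys: a character rises 10 units over one second (24 frames), then holds.
height_keys = [(1, 0.0), (25, 10.0), (49, 10.0)]
for frame in (1, 7, 13, 25, 40):
    print(frame, interpolate(height_keys, frame))
```

Halfway between the first two keys (frame 13), the value is halfway between them, 5.0.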

FX and Simulation

What’s the difference between simulation and FX? Both are used to create the look of the movie world, but they are slightly different. FX refers to the effects themselves, such as explosions, fire, smoke, water, and destruction, created to give scenes a realistic look.

Many of the explosions and fires you see on film today were simulated, which means software calculated how the flames, smoke, or debris should behave, and the results were created digitally in the computer. Simulation, on the other hand, is the broader technique of computing motion from physics-like rules, and it can be applied to almost anything, from cloth and hair to crowds and water.

Digital techniques can also create an entire environment, a landscape, or an object. If you have ever seen the movie Avatar, you know exactly what I’m talking about: much of its world was created in the computer, yet it looked so realistic that it felt real.

There are so many uses for FX and simulation, but one of the main ones is to create the look of a scene. In movies like Avatar, where much of the environment is computer-generated, you never question the reality of the world of Pandora.
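At its core, a simulation repeatedly applies physical rules over small time steps. The Python sketch below is a toy particle system, say debris from an explosion, under gravity with a simple ground bounce, using semi-implicit Euler integration. The bounce factor and starting values are hypothetical; production FX tools solve far more complex fluid, fire, and rigid-body equations.

```python
from dataclasses import dataclass

GRAVITY = -9.81   # m/s^2, pulling particles down the y axis
BOUNCE = 0.5      # hypothetical fraction of speed kept after hitting the ground

@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float

def step(particles, dt):
    """Advance every particle by one time step with semi-implicit Euler integration:
    update velocity from gravity first, then position from the new velocity."""
    for p in particles:
        p.vy += GRAVITY * dt
        p.x += p.vx * dt
        p.y += p.vy * dt
        if p.y < 0.0:          # passed through the ground plane
            p.y = 0.0
            p.vy = -p.vy * BOUNCE

# Hypothetical debris, simulated for one second at 24 steps per second.
debris = [Particle(0.0, 10.0, 1.0, 0.0), Particle(0.0, 10.0, -2.0, 3.0)]
for _ in range(24):
    step(debris, 1 / 24)
for p in debris:
    print(f"x={p.x:.2f} y={p.y:.2f}")
```

Smaller time steps make the result more accurate at the cost of more computation, which is one reason large FX simulations take so long to run.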

Lighting and Rendering a Scene

You’ve heard of lighting, right? The “flickering light bulbs” on the ceiling in your living room? Well, in VFX, lighting means placing and adjusting digital light sources in a shot. Once the lights are in place, the scene needs to be rendered.

Rendering is the process of computing final 2D images from a 3D scene: the software works out how light from the digital lights interacts with each object’s surface, as seen by the virtual camera. Lighting and rendering in visual effects are used to make objects look more realistic.

Lighting and rendering in visual effects are also used to add depth to images and create special effects such as glowing faces and eyes.

The first step is lighting. If the digital lighting doesn’t match the lighting of the real environment the footage was shot in, it’s very hard to render an image that blends in.

The second is rendering, in which the software calculates the shading, shadows, colors, and textures. In the third step, the rendered images are passed to compositing and combined with the live-action footage for the final product.

So how do they get that? Studios use rendering software such as Pixar’s RenderMan, one of the best-known renderers in film. Artists build and light a scene in 3D software, then the renderer turns that scene into finished frames, which can be tweaked and re-rendered as needed.
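A renderer’s core job is computing how much light each surface point reflects toward the camera. The simplest model is Lambertian (diffuse) shading, where brightness follows the cosine of the angle between the surface normal and the direction to the light. This Python sketch implements only that one textbook formula for a single point; it is not how RenderMan or any production renderer is implemented, and those use far richer physically based models plus shadows and bounced light.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, to_light, light_rgb, albedo_rgb):
    """Diffuse (Lambertian) shading for one surface point.
    Brightness scales with the cosine of the angle between the surface normal
    and the direction toward the light; surfaces facing away get no light."""
    n = normalize(normal)
    l = normalize(to_light)
    cos_theta = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(alb * light * cos_theta for alb, light in zip(albedo_rgb, light_rgb))

# Hypothetical reddish surface lit by a white light from three directions.
albedo = (0.8, 0.3, 0.2)
white = (1.0, 1.0, 1.0)
print(lambert((0, 0, 1), (0, 0, 1), white, albedo))   # light straight on
print(lambert((0, 0, 1), (1, 0, 1), white, albedo))   # light at 45 degrees
print(lambert((0, 0, 1), (0, 0, -1), white, albedo))  # light behind the surface
```

Moving a light, as a lighting artist does, changes the angle and therefore the brightness of every point the renderer computes.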

Digital Compositing: The Unsung Heroes of VFX

Digital compositing is the process of combining separately created images, such as live-action footage, rendered CG elements, and matte paintings, into a single final frame. It is used in nearly every visual effects shot, not only in scenes with action or obvious special effects.

The goal is to combine the images seamlessly, and a lot of work goes into making them blend. The key is to make sure the layers are aligned properly and stay locked together from frame to frame without drifting, which can be tricky at times.

Compositing involves using software and hardware to combine images together.

It can be done manually or with some automation. The software blends images together so they look as if they were one. The hardware side starts on the set.

That hardware can include a green screen or another studio backdrop, usually lit evenly by an array of lights so that it shows one clean, uniform color.

This makes it easier for the software to separate the foreground and blend the images together seamlessly. There are many different ways to composite images together.
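One standard way to stack layers is the Porter-Duff “over” operation. With premultiplied alpha (color values already multiplied by opacity), the result is the foreground plus the background scaled by whatever the foreground leaves uncovered, 1 - alpha. This Python sketch applies it to single RGBA pixels with values from 0 to 1; the tiny images are hypothetical, and real compositing software applies the same idea to whole images alongside many other operations.

```python
def over(fg, bg):
    """Porter-Duff 'over' for one pixel. Both pixels are (r, g, b, a) with
    premultiplied alpha (color already multiplied by opacity), values 0..1."""
    keep = 1.0 - fg[3]  # fraction of the background the foreground leaves visible
    return tuple(f + b * keep for f, b in zip(fg, bg))

def composite(fg_image, bg_image):
    """Apply 'over' pixel by pixel to two equally sized images (lists of rows)."""
    return [[over(f, b) for f, b in zip(fg_row, bg_row)]
            for fg_row, bg_row in zip(fg_image, bg_image)]

# Hypothetical 1x3 images: opaque red, half-transparent red, and empty foreground
# over a solid blue background plate.
fg = [[(1.0, 0.0, 0.0, 1.0), (0.5, 0.0, 0.0, 0.5), (0.0, 0.0, 0.0, 0.0)]]
bg = [[(0.0, 0.0, 1.0, 1.0)] * 3]
print(composite(fg, bg))
```

The opaque pixel hides the background completely, the half-transparent one mixes evenly with it, and the empty one lets it show through unchanged.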

Green Screen vs Blue Screen?

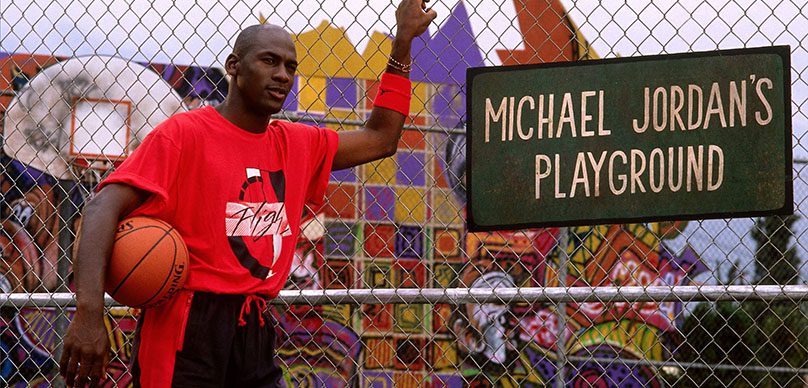

A green screen is a backdrop of a single, evenly lit shade of bright green placed behind actors or objects on a movie set. In compositing, software removes (or “keys out”) that color and replaces it with a new background, such as a CG environment. The backdrop can be a painted wall on a special stage or a fabric screen.

A blue screen works the same way, just with blue. Blue was the traditional choice in the film era and is still useful when a scene contains green, such as costumes or foliage, or when colored light spilling onto the actors needs to be kept less noticeable. Green is more common today, partly because digital camera sensors are most sensitive to green, which helps produce a cleaner key.

Either kind of screen can be built into a set or used as a portable screen placed behind existing sets or actors.
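Keying can be illustrated with a simple color-difference approach: the more a pixel’s green exceeds its red and blue, the more likely it is screen rather than foreground. This Python sketch turns that difference into an alpha (opacity) value with a soft ramp for edges. The `threshold` and `softness` parameters and the sample colors are hypothetical values chosen for illustration; production keyers are far more sophisticated and also remove green spill from the foreground.

```python
def green_key_alpha(r, g, b, threshold=0.1, softness=0.3):
    """Estimate foreground opacity for one pixel (RGB values 0..1) with a simple
    color-difference key. threshold and softness are hypothetical defaults."""
    dominance = g - max(r, b)  # how much greener the pixel is than its other channels
    if dominance <= threshold:
        return 1.0             # not green enough to be screen: keep as foreground
    if dominance >= threshold + softness:
        return 0.0             # strongly green: treat as screen, fully transparent
    # Partial greens (hair, motion blur, edges) get a soft, in-between alpha.
    return 1.0 - (dominance - threshold) / softness

samples = {
    "screen": (0.1, 0.9, 0.15),
    "skin": (0.85, 0.6, 0.5),
    "hair edge": (0.2, 0.45, 0.2),
}
for label, rgb in samples.items():
    print(label, round(green_key_alpha(*rgb), 2))
```

The resulting alpha is what lets compositing software keep the actor, drop the screen, and blend soft edges like hair into the new background.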

Down the Rabbit Hole

I hope you have enjoyed your journey down the rabbit hole of visual effects. In conclusion, in VFX-heavy movies, much of what you see on screen is created or enhanced using computers. Visual effects are used to create images of things that were never actually in front of the camera.

The goal is to create images that were not possible before the development of VFX techniques. When a filmmaker makes a movie, they have to tell a story in a limited amount of time.

It’s not always possible for a director or cinematographer to tell a story without using some sort of visual effects. VFX can include things like creating a new setting, changing an object, or removing something.