Film noir is a filmmaking term used predominantly to refer to stylish Hollywood crime dramas, particularly those that emphasize sexual motivations and cynical attitudes. Hollywood’s classic film noir era is generally regarded as extending from the early 1940s to the late 1950s.

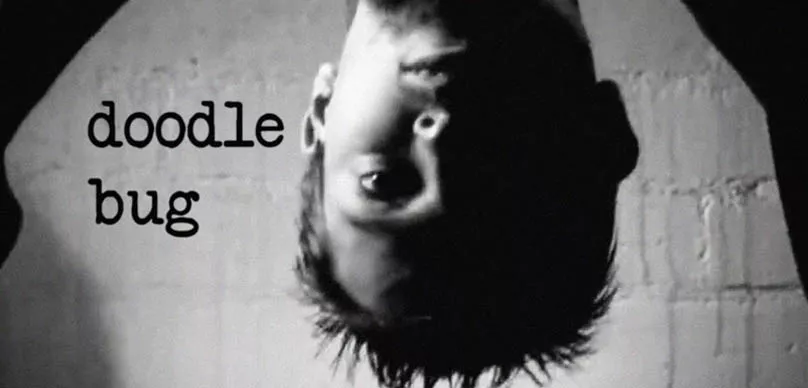

During this period, film noir was associated with a low-key, black-and-white visual style that has its roots in German Expressionist filmmaking techniques.

The term itself is French for “black film,” or film of the night. It was inspired by the Série noire, a line of cheap paperbacks that translated hard-boiled American crime fiction authors and found them a modern audience in France.

If you’re confused and believe that every black-and-white movie you’ve seen is film noir, the following features should clarify things:

• A noir film never misleads its audience into believing that there’s going to be a happy ending.

• Noir films usually use locations that reek of the night or have shadows everywhere, and they show lots of alleys, apartment buildings with high turnover rates, and bartenders and taxi drivers who have seen it all.

• The women in it would just as soon kill you as they’d love you, and vice versa.

• Almost everyone in noir films is always smoking, as if to tell the audience, “On top of everything else I’m supposed to do, I’ve been assigned to finish three packs of cigarettes today.” ‘Out of the Past’ has been called the greatest smoking movie of all time; in it, Robert Mitchum and Kirk Douglas smoke furiously at each other.

• For women, the order of the day was wearing floppy hats, mascara, lipstick, high heels, red dresses, elbow-length gloves, and low necklines; calling doormen by their first names; having gangster boyfriends; mixing drinks; developing soft spots for alcoholic private detectives; and, last but not least, sprawling dead on the ground with every hair in place and every limb meticulously arranged.

• And for the men, the order of the day was wearing suits, ties, and fedoras; staying in shabby residential hotels with neon signs blinking through the window; driving cars with running boards; going to all-night diners; being on a first-name basis with almost all the homicide detectives in the city; protecting kids from the bad guys; and knowing a lot of people whose job descriptions end in “ies,” like cabbies, junkies, bookies, alkies, jockeys, and newsies.

• The relationships usually depicted in these movies portray love as only the final flop in the poker game of death.

During the World War II era and afterward, audiences responded to this adult-oriented kind of film simply because it was vivid and fresh. Soon, many writers, directors, actors, and cameramen joined the trend because they were eager to bring a more mature worldview to Hollywood.

The trend was fueled by the artistic and financial success of Double Indemnity, Billy Wilder’s adaptation of James M. Cain’s novella of the same name. Following the movie’s success, several studios started pumping out murder dramas and crime thrillers with a uniquely dark and poisonous view of existence.

Very few of the artists who made movies fitting the description of film noir called them that at the time. In later years, however, this style of filmmaking proved hugely influential, both among industry peers and on future generations of literary and cinematic storytellers.

Film noir offered a vivid commingling of lost innocence, desperate desire, hard-edged cynicism, shadowy sexuality, and doomed romanticism, among other things.

To this day, there is a raging debate over whether noir is a distinct film genre, defined by its content, or a style of storytelling recognized by its visual attributes. Since the debate has no right or wrong answer, noir stays alive and fresh for each new generation of film lovers.

Many people dislike black-and-white movies and prefer color, forgetting that many of the most famous movies ever made are in black and white. Black-and-white movies can not only do just as much as color ones but can even look better.

Film noir is the best place to prove this point. This style of filmmaking continues to influence modern cinematography everywhere, including television series such as Breaking Bad. In some cases, comic strips are the real embodiment of this cinematic style.

The dynamic range of shading in black-and-white films makes for a more interesting composition of shots. It also shows how a monochrome palette can produce a striking look with higher contrast.

The simplicity of black and white means that the eye can absorb more of the key features in a shot without being distracted by other elements. While color is great, sometimes simple is better.

In the era of color, filmmakers trying to give their movies a noir feel have two options. The first is to mimic noir in color: they can fill the screen with bright, vibrant colors to achieve the same level of contrast and the same aesthetic as black-and-white movies.

The second option is to film in color but make the image so monochromatic that it almost looks black and white.

Black-and-white filming accentuates contrast, so it can be used to emphasize visual storytelling. Many color productions these days make subtle use of the noir style. Some scenes in Breaking Bad could be mistaken for an old film noir.

Much of its gorgeous cinematography owes itself to the techniques that shine strongest in black-and-white movies. You can find film noir lighting throughout the series: the window blinds, the good side and the bad side, high contrast, and smoking. Smoke looks incredibly gorgeous in black-and-white films.

‘The Big Combo’ shows all the beauty of black and white in one particular scene that combines silhouettes, smoke, and high contrast in a single shot.

They Live by Night

Nicholas Ray’s astonishingly self-confident, poetic debut opens with lush images of a boy and a girl in blissful mutual absorption. These characters are never properly introduced to the world. All we see is their shocked faces turning toward the camera when a loud horn suddenly goes off, obliterating every other sound. Shortly after that, the title appears: They Live by Night.

Kiss Me Deadly

This black-hearted apotheosis of noir is an essential film of the 1950s, one that embodies the most profound anxieties of Eisenhower’s America. Its ending depicts the detonation of a nuclear bomb on Malibu Beach, which presumably leads to the end of the world. The moral universe created by Robert Aldrich is so violently out of balance that even the opening credits run backwards. While the protagonist, Mike Hammer, is an amoral, proto-fascist bedroom investigator and scumbag, the villains are a hundred times worse. Hammer is a cynic who knows everything about human weakness but, by the end of the movie, still nothing about the frame he’s in.

Blood Simple

This is perhaps the most straightforward film by the Coen brothers, even though, ironically, it’s not that simple at all. It takes its atmospheric title from a line in Dashiell Hammett’s novel Red Harvest. The film can be seen as a sort of preparation for their breakout work, Fargo, which similarly features a plot in which an evil plan goes sideways. It also marked their first use of literary genre elements in the “real” world, a formula that Quentin Tarantino eventually refined. And just as in most noir films, the basis of the story is a case of cherchez la femme.

Lift to the Scaffold

This thriller by Louis Malle is based on Noël Calef’s 1956 novel. While it heralds the imminent arrival of the French New Wave, it still qualifies as film noir for its appropriation of US postwar cinema in its portrayal of lovers gone corrupt.

The Third Man

The legendary Steven Soderbergh once wrote of this movie, “One of the remarkable things about the film is that it’s a great story regardless of what people say about it.” And he was right: The Third Man is one of the greatest, wittiest, most steadfastly compassionate, and most elegantly shot thrillers of all time. The movie is about more than its plot. Betrayal, disillusion, and misdirected sexual longing are a few of my favorite things, and The Third Man blends them all impeccably with an unimpeachable plot and a location that blurs the line between decay and beauty.